This software can help you “poison” your photos against AI fakery

As we recently covered, the problem of deepfakes and AI-based photo manipulation is no joke in the harm it can cause.

Now, software tools are emerging to counteract this specific kind of photo and video manipulation. One example is a program called Photoguard, recently developed by a team of researchers at MIT.

The main claim behind Photoguard is that it can deny AI tools the ability to convincingly modify individual photos.

The researchers who developed the software were led by computer science professor Aleksander Madry, and they have published a paper that demonstrates how their software tool works against unwanted AI edits.

Their detailed report is titled “Raising the Cost of Malicious AI-Powered Image Editing”. As its title implies, it doesn’t necessarily promise that AI deepfakes can be concretely stopped, only that they can be made prohibitively difficult.

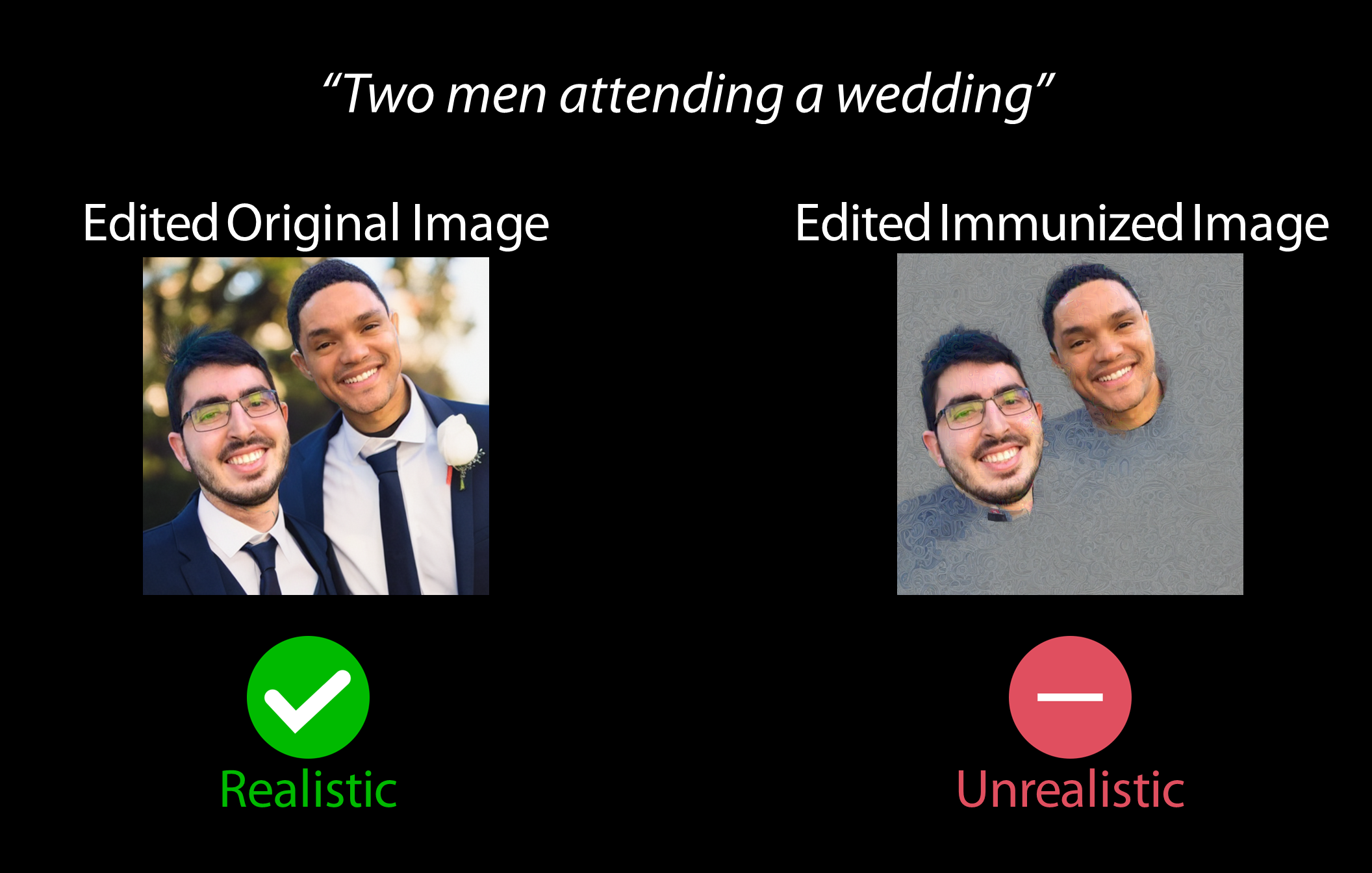

The essential claim of the MIT researchers is that Photoguard can “immunize” photos against AI editing through data contamination.

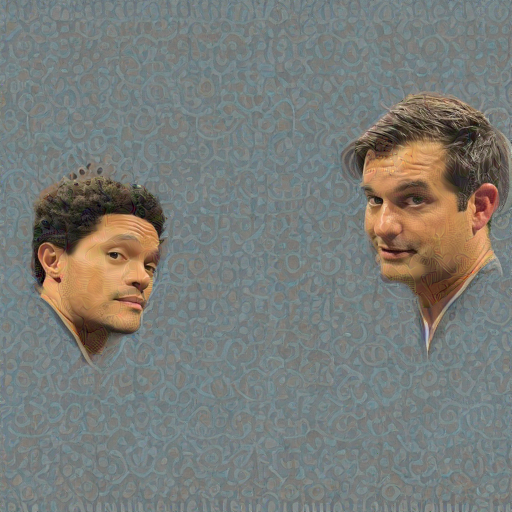

In other words, the software will manipulate pixels in an image in a way that creates invisible noise for that photo. This noise then makes AI editing systems unable to create realistic edits to the photo. Instead, the parts of the image with the noise are left visibly distorted.
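
To make the idea of bounded, invisible noise more concrete, here is a minimal toy sketch in Python. It is not the researchers' code: the linear `encoder`, the all-zero `target`, the function name `immunize`, and the `eps`, `step` and `iters` values are all assumptions chosen for illustration. A real tool would work against the neural network inside an actual AI image editor. The sketch only shows the general technique of nudging pixels in small steps while never letting any pixel drift more than `eps` from its original value.

```python
import numpy as np

def immunize(image, encoder, target, eps=8 / 255, step=1 / 255, iters=50):
    """Toy projected-gradient sketch (hypothetical, not Photoguard itself).

    Nudges `image` so that `encoder @ image` moves toward `target`,
    while every pixel stays within `eps` of its original value.
    """
    x = image.copy()
    for _ in range(iters):
        # Gradient of 0.5 * ||encoder @ x - target||^2 with respect to x.
        grad = encoder.T @ (encoder @ x - target)
        # Take a small signed step that lowers the distance to the target.
        x = x - step * np.sign(grad)
        # Project back so no pixel differs from the original by more than eps.
        x = np.clip(x, image - eps, image + eps)
        # Keep pixel values inside the valid [0, 1] range.
        x = np.clip(x, 0.0, 1.0)
    return x

rng = np.random.default_rng(0)
image = rng.uniform(0.2, 0.8, size=64)   # flattened stand-in for a photo
encoder = rng.normal(size=(16, 64))      # assumed linear stand-in for an AI encoder
target = np.zeros(16)                    # assumed "meaningless" target encoding

protected = immunize(image, encoder, target)
max_change = np.abs(protected - image).max()
before = np.linalg.norm(encoder @ image - target)
after = np.linalg.norm(encoder @ protected - target)
print(f"largest pixel change: {max_change:.4f}")
print(f"distance to target encoding: {before:.2f} -> {after:.2f}")
```

In this toy setup the largest pixel change stays at or below `eps`, which is the "invisible" part, while the stand-in encoder's view of the image shifts toward the chosen target, which is the "poisoning" part.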

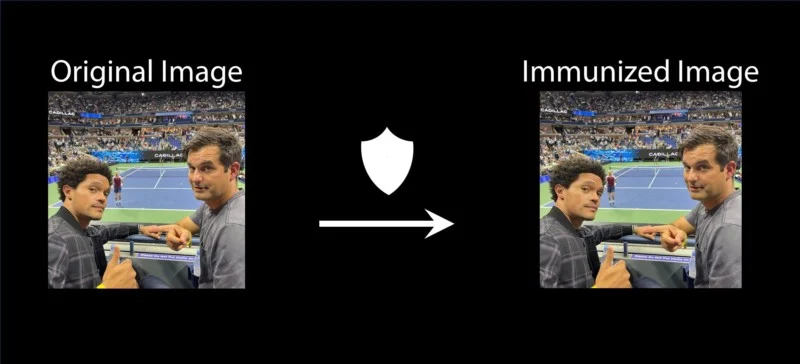

One of the images shown by the researchers in their paper demonstrates how Photoguard works by showing a real, completely unmodified photo of comedians Trevor Noah and Michael Kosta at a tennis match.

This photo is then easily edited by an AI to show their faces in a completely different context with the stadium behind them in the real photo gone.

The researchers then add their invisible noise to the original photo and have an AI attempt to edit it again. This fails, instead showing a sort of grayish repeating pattern around the faces of the two comedians.

Madry also tweeted images in 2022 demonstrating how well his team’s software worked at spoiling AI manipulation of other photos.

Last week on @TheDailyShow, @Trevornoah asked @OpenAI @miramurati a (v. important) Q: how can we safeguard against AI-powered photo editing for misinformation? https://t.co/awTVTX6oXf

My @MIT students hacked a way to “immunize” photos against edits: https://t.co/zsRxJ3P1Fb (1/8) pic.twitter.com/2anaeFC8LL

— Aleksander Madry (@aleks_madry) November 3, 2022

Another researcher, Hadi Salman, explained in a November interview with the site Gizmodo that Photoguard can introduce its noise in just seconds, making it usable for mass photo protection.

The PhD student also explained that the software works better the higher an image’s resolution is, because the extra pixels allow for more invisible distortion.

What the research team hopes to see is this technology being applied automatically to photos posted on the web and on social media, making most digital photos unusable for, and largely impervious to, AI manipulation.

If this were to happen, it wouldn’t apply to all the billions of photos that are already available for AI manipulation on the world’s social media sites, but it could be a useful defense for future photo uploads by users.

On the other hand, social media platforms are already moving into using scraped images for their own AI training. This might make them hesitant about “poisoning” future photo submissions against their own AI.

Furthermore, there are no guarantees that future AI technology or updated iterations of current AI tech won’t find a way around software like Photoguard.

For now, it’s at least good to see that someone is making efforts to counteract the often blatant use of deepfakes and unwanted AI editing against people’s photos online.

The MIT blog has more details on Photoguard here and if you want a really detailed exploration of how this software works, the original PDF research paper by Madry and his team is worth a look.

Recent cases of extremely abusive AI use include pedophiles taking real photos of children from the web to generate their own AI imagery, and image hackers using the faces of Twitch streamers in deepfake porn videos.
